
Preparing the NFS server

The attached files below let you build the NFS server with Vagrant!

OpenVPN is optional: use its Vagrant setup only if you need it...

openvpn_server.zip

k8s and nfs 외 6.zip

Once the NFS server is ready, test that a PVC binds to a PV.

1. tommy_pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pc
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany
  nfs:
    server: 192.168.29.14
    path: /mnt/nfs_share

2. tommy_pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nfs-pvc
spec:
  storageClassName: ""   # bind only to a PV without a StorageClass (skips any default class)
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
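Why can the PV from step 1 and the PVC from step 2 bind? The Python sketch below models a few of the criteria the PV controller checks. The StorageClass names must be equal, the PV must offer every requested access mode, and its capacity must cover the request.

It is a simplified model, not the controller's actual code: it ignores label selectors, volumeMode, node affinity and the preference for the smallest fitting PV. It also shows why a PVC that picks up a StorageClass (for example a cluster default) no longer matches the class-less `nfs-pc`.

```python
from dataclasses import dataclass

GI = 2 ** 30  # 1Gi in bytes


@dataclass
class PV:
    name: str
    capacity_bytes: int
    access_modes: frozenset
    storage_class: str = ""  # "" = the PV has no StorageClass


@dataclass
class PVC:
    name: str
    request_bytes: int
    access_modes: frozenset
    storage_class: str = ""  # "" = ask for a PV without a StorageClass


def can_bind(pv: PV, pvc: PVC) -> bool:
    """Simplified static-binding check (assumption: ignores selectors,
    volumeMode, node affinity and the smallest-fitting-PV preference)."""
    return (
        pv.storage_class == pvc.storage_class        # classes must be identical
        and pvc.access_modes <= pv.access_modes      # every requested mode is offered
        and pv.capacity_bytes >= pvc.request_bytes   # the PV is large enough
    )


rwx = frozenset({"ReadWriteMany"})
nfs_pv = PV("nfs-pc", 10 * GI, rwx)     # tommy_pv.yaml
nfs_pvc = PVC("nfs-pvc", 10 * GI, rwx)  # tommy_pvc.yaml
print(can_bind(nfs_pv, nfs_pvc))  # True
```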

3. Check that the PV and PVC are bound!!

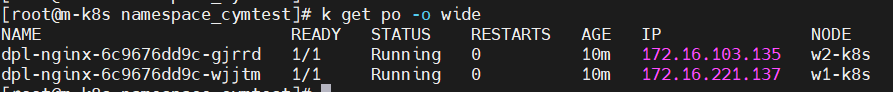

A Deployment that uses the PVC

4. Create the pod Deployment: tommy_dep.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: dpl-nginx
spec:
  selector:
    matchLabels:
      app: dpl-nginx
  replicas: 2
  template:
    metadata:
      labels:
        app: dpl-nginx
    spec:
      containers:
        - name: master
          image: nginx
          ports:
            - containerPort: 80
          volumeMounts:
            - mountPath: /mnt
              name: pvc-volume
      volumes:
        - name: pvc-volume
          persistentVolumeClaim:
            claimName: nfs-pvc

5. Check the mount information


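6. Check the mount information from the pod and from the NFS server

The screen below shows a touch test in the mounted directory after connecting to the nginx pod.

The screen below checks the same mounted directory on the NFS server.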
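In the Deployment from step 4, the container's `volumeMounts[].name` (`pvc-volume`) must match an entry in `spec.volumes`. That entry is what points at the PVC `nfs-pvc`. The API server rejects a pod template whose mount refers to an undeclared volume.

The hypothetical helper below (`unmatched_mounts` is not part of any Kubernetes tool) checks that link on the pod template written as a Python dict.

```python
def unmatched_mounts(pod_spec: dict) -> list:
    """Return (container, mount) pairs whose mount name has no matching volume."""
    declared = {v["name"] for v in pod_spec.get("volumes", [])}
    missing = []
    for container in pod_spec.get("containers", []):
        for mount in container.get("volumeMounts", []):
            if mount["name"] not in declared:
                missing.append((container["name"], mount["name"]))
    return missing


# Pod template spec of dpl-nginx (tommy_dep.yaml) as a Python dict.
dpl_nginx_spec = {
    "containers": [{
        "name": "master",
        "image": "nginx",
        "ports": [{"containerPort": 80}],
        "volumeMounts": [{"mountPath": "/mnt", "name": "pvc-volume"}],
    }],
    "volumes": [{
        "name": "pvc-volume",
        "persistentVolumeClaim": {"claimName": "nfs-pvc"},  # the PVC from step 2
    }],
}

print(unmatched_mounts(dpl_nginx_spec))  # []
```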

Step 2! Creating a StorageClass

On the NFS server:

sudo systemctl restart nfs-kernel-server
sudo mkdir /nfs-volume
cd /nfs-volume
sudo rm /nfs-volume/*   # remove any files already in the directory

Setting up the NFS Dynamic Provisioner

https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner

1. Download it from GitHub.

git clone https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner.git

2. After downloading, move to the nfs-subdir-external-provisioner/deploy directory.

cd nfs-subdir-external-provisioner/deploy

3. Create the resources defined in rbac.yaml.

kubectl create -f rbac.yaml

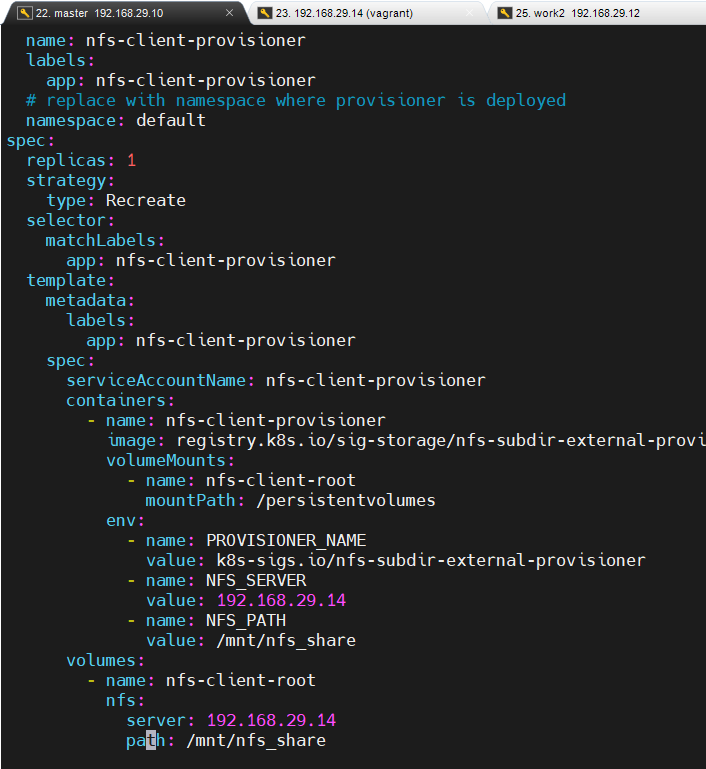
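The ClusterRole in rbac.yaml (listed in full further down) grants the provisioner only the verbs it needs on PVs, PVCs, StorageClasses and events.

Below is a simplified Python model of how RBAC rules are evaluated. It assumes only `apiGroups`, `resources` and `verbs` matter and ignores resourceNames, subresources and non-resource URLs. It shows, for example, that the provisioner may create PVs but may not delete PVCs.

```python
def rule_allows(rule: dict, api_group: str, resource: str, verb: str) -> bool:
    """True if one RBAC rule covers the request ("*" acts as a wildcard)."""
    def covers(values, wanted):
        return "*" in values or wanted in values

    return (covers(rule["apiGroups"], api_group)
            and covers(rule["resources"], resource)
            and covers(rule["verbs"], verb))


def allows(rules: list, api_group: str, resource: str, verb: str) -> bool:
    # RBAC has no deny rules: a request is allowed if any rule covers it.
    return any(rule_allows(r, api_group, resource, verb) for r in rules)


# Rules of the nfs-client-provisioner-runner ClusterRole from rbac.yaml.
runner_rules = [
    {"apiGroups": [""], "resources": ["nodes"], "verbs": ["get", "list", "watch"]},
    {"apiGroups": [""], "resources": ["persistentvolumes"],
     "verbs": ["get", "list", "watch", "create", "delete"]},
    {"apiGroups": [""], "resources": ["persistentvolumeclaims"],
     "verbs": ["get", "list", "watch", "update"]},
    {"apiGroups": ["storage.k8s.io"], "resources": ["storageclasses"],
     "verbs": ["get", "list", "watch"]},
    {"apiGroups": [""], "resources": ["events"], "verbs": ["create", "update", "patch"]},
]

print(allows(runner_rules, "", "persistentvolumes", "create"))       # True
print(allows(runner_rules, "", "persistentvolumeclaims", "delete"))  # False
```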

4. Open the deployment file and edit it.

vi deployment.yaml

The parts to change are:

...

    env:
      - name: PROVISIONER_NAME
        value: k8s-sigs.io/nfs-subdir-external-provisioner
      - name: NFS_SERVER
        value: 192.168.29.14
      - name: NFS_PATH
        value: /mnt/nfs_share
volumes:
  - name: nfs-client-root
    nfs:
      server: 192.168.29.14
      path: /mnt/nfs_share

1. rbac.yaml: creates the ServiceAccount and its RBAC roles.

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

2. Create the Deployment.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.29.14
            - name: NFS_PATH
              value: /mnt/nfs_share
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.29.14
            path: /mnt/nfs_share

kubectl create -f deployment.yaml

3. Create class.yaml.

[root@m-k8s deploy]# cat class.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "false"

kubectl create -f class.yaml

[root@m-k8s deploy]# pwd

/home/namespace_cymtest/nfs-subdir-external-provisioner/deploy

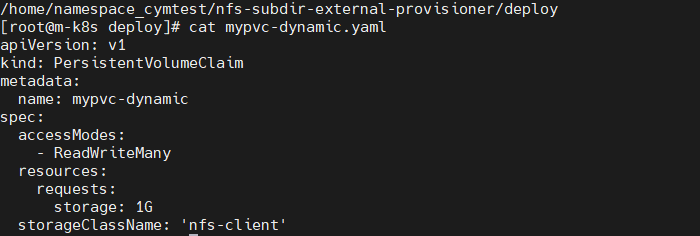
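The comment in class.yaml is the key invariant: the StorageClass `provisioner` field must equal the `PROVISIONER_NAME` env value of the provisioner Deployment. If they differ, no provisioner picks up the claims and PVCs using the class stay Pending.

The hypothetical check below (`provisioner_matches` is an illustration, not a kubectl feature) compares the two manifests as Python dicts.

```python
def env_value(container: dict, name: str):
    """Return the literal value of env var `name` in a container, or None."""
    for entry in container.get("env", []):
        if entry["name"] == name:
            return entry.get("value")
    return None


def provisioner_matches(storage_class: dict, deployment: dict) -> bool:
    """True if some container's PROVISIONER_NAME equals the class's provisioner."""
    containers = deployment["spec"]["template"]["spec"]["containers"]
    wanted = storage_class["provisioner"]
    return any(env_value(c, "PROVISIONER_NAME") == wanted for c in containers)


nfs_client_class = {  # class.yaml
    "metadata": {"name": "nfs-client"},
    "provisioner": "k8s-sigs.io/nfs-subdir-external-provisioner",
}
provisioner_deployment = {  # relevant part of deployment.yaml
    "spec": {"template": {"spec": {"containers": [{
        "name": "nfs-client-provisioner",
        "env": [
            {"name": "PROVISIONER_NAME", "value": "k8s-sigs.io/nfs-subdir-external-provisioner"},
            {"name": "NFS_SERVER", "value": "192.168.29.14"},
            {"name": "NFS_PATH", "value": "/mnt/nfs_share"},
        ],
    }]}}},
}

print(provisioner_matches(nfs_client_class, provisioner_deployment))  # True
```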

4. Create the PVC (pvc.yaml).

vi mypvc-dynamic.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mypvc-dynamic
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1G
  storageClassName: 'nfs-client'

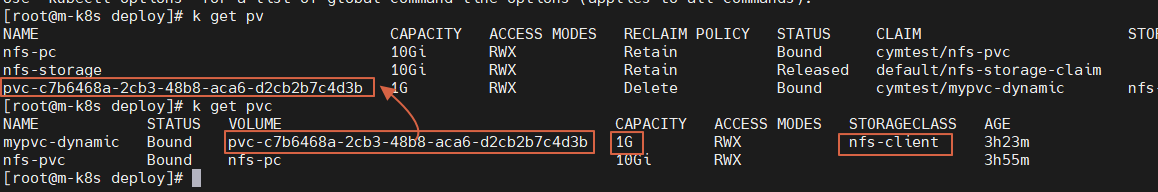

kubectl create -f mypvc-dynamic.yaml
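This PVC requests `1G`, while the static PV/PVC earlier used `10Gi`. In Kubernetes quantities these suffixes differ: `G` is decimal (10^9 bytes) and `Gi` is binary (2^30 bytes).

The minimal parser below handles only whole numbers with the listed suffixes. Decimals, exponents, milli units and the larger suffixes (P, E, Pi, Ei) are deliberately left out, so treat it as an illustration rather than a full implementation of the quantity format.

```python
import re

# Subset of Kubernetes quantity suffixes (assumption: integers only).
SUFFIXES = {
    "": 1,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,      # decimal SI
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,   # binary
}


def parse_quantity(q: str) -> int:
    """Convert a quantity such as '1G' or '10Gi' into bytes."""
    match = re.fullmatch(r"(\d+)([A-Za-z]*)", q.strip())
    if not match or match.group(2) not in SUFFIXES:
        raise ValueError(f"unsupported quantity: {q!r}")
    return int(match.group(1)) * SUFFIXES[match.group(2)]


print(parse_quantity("1G"))   # 1000000000
print(parse_quantity("1Gi"))  # 1073741824
```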

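5. Below is the NFS path as seen from m-k8s, the master node.

6. Below is the mount information on w2-k8s (worker node 2).

You can also see that the share was mounted automatically on worker node 2.

Simply by creating the PVC, a PV is created automatically through the StorageClass.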

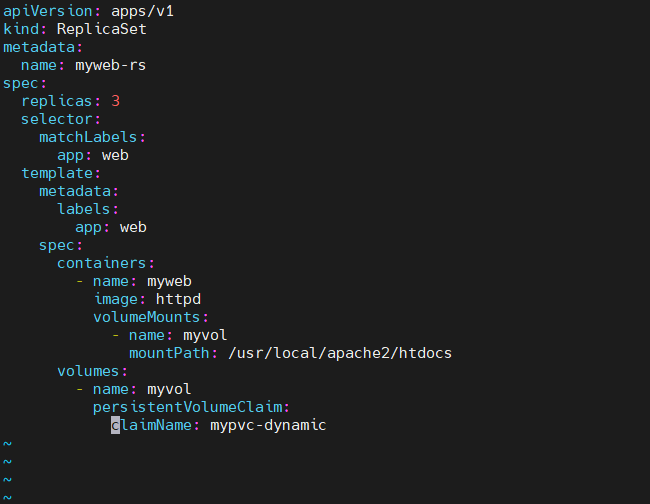
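The subdirectory that appears under the NFS path is created by the provisioner. According to the nfs-subdir-external-provisioner README, the default naming is `${namespace}-${pvcName}-${pvName}`. Dynamically provisioned PV names have the form `pvc-<claim UID>`.

The sketch below only reproduces that default naming. `pvc-0000` is a placeholder, not a real UID.

```python
import posixpath


def provisioned_dir(nfs_path: str, namespace: str, pvc_name: str, pv_name: str) -> str:
    """Directory the provisioner would create under NFS_PATH (default naming)."""
    return posixpath.join(nfs_path, f"{namespace}-{pvc_name}-{pv_name}")


# "pvc-0000" is a placeholder; a real PV name is "pvc-" followed by the claim UID.
print(provisioned_dir("/mnt/nfs_share", "default", "mypvc-dynamic", "pvc-0000"))
# /mnt/nfs_share/default-mypvc-dynamic-pvc-0000
```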

vi myweb-rs-dynamic.yaml

apiVersion: apps/v1

kind: ReplicaSet

metadata:

name: myweb-rs

spec:

replicas: 3

selector:

matchLabels:

app: web

template:

metadata:

labels:

app: web

spec:

containers:

- name: myweb

image: httpd

volumeMounts:

- name: myvol

mountPath: /usr/local/apache2/htdocs

volumes:

- name: myvol

persistentVolumeClaim:

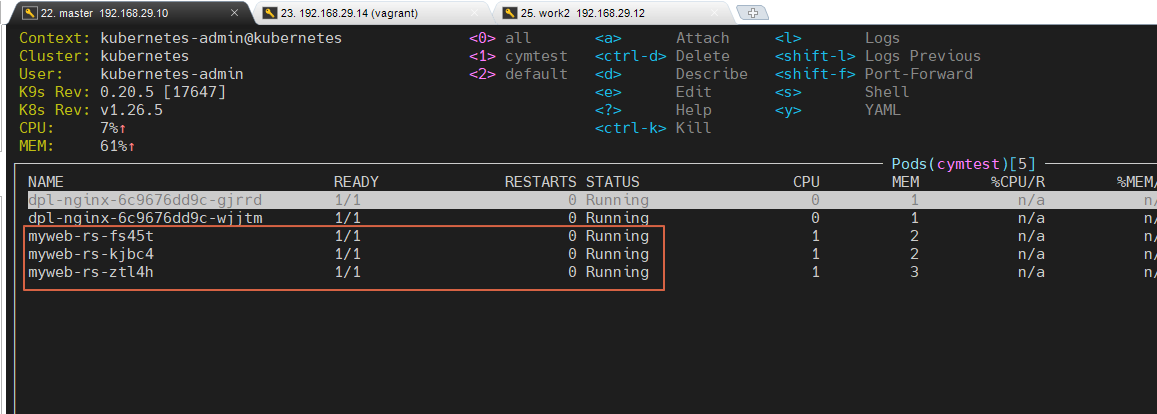

claimName: mypvc-dynamickubectl create -f myweb-rs-dynamic.yaml7. 아래 내용은 k9s 에서 myweb-rs 3개의 pod 를 볼수가 있습니다.

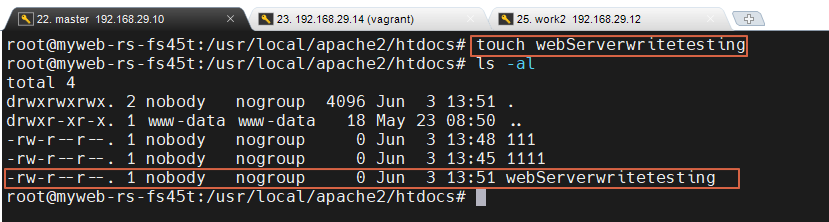
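All three myweb-rs pods mount the same PVC, and they may be scheduled on different nodes (as seen on w2-k8s). That is why the PVC asks for `ReadWriteMany`, which NFS supports.

The sketch below is a simplified reading of what each access mode permits: the modes describe node-level mount capability, except `ReadWriteOncePod`, which limits the volume to a single pod. It is an illustration, not the scheduler's logic.

```python
def mode_allows(mode: str, pods: int, nodes: int, writes: bool) -> bool:
    """Simplified check: can `pods` pods on `nodes` nodes use a volume in `mode`?"""
    if mode == "ReadWriteOnce":
        return nodes <= 1   # read-write, any number of pods, but on one node only
    if mode == "ReadOnlyMany":
        return not writes   # many nodes, read-only
    if mode == "ReadWriteMany":
        return True         # many nodes, read-write
    if mode == "ReadWriteOncePod":
        return pods <= 1    # a single pod in the whole cluster
    raise ValueError(f"unknown access mode: {mode}")


# myweb-rs: 3 web server pods writing to htdocs, spread over 2 worker nodes.
print(mode_allows("ReadWriteMany", pods=3, nodes=2, writes=True))  # True
print(mode_allows("ReadWriteOnce", pods=3, nodes=2, writes=True))  # False
```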


8. Exec into one of the myweb-rs-xxx web server pods and create a file with touch, as shown below.


9. If you look at the path on the NFS server, you can see that webServerwritetesting has been written there.

kubectl delete -f .   # delete every resource defined by the YAML files in this directory

kubectl get pv,pvc

### You can confirm that deleting the PVC also deletes the PV.

10. The following is just for reference: for attaching NFS directly in a pod (without a PVC), see step 5 below.

Done!
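In the cleanup above, deleting the PVC also removed the PV. Here is why.

A dynamically provisioned PV takes its reclaimPolicy from the StorageClass, which defaults to `Delete`. With `archiveOnDelete: "false"`, nfs-subdir-external-provisioner removes the backing directory instead of archiving it. Per its README, the directory is archived when the parameter is absent or true.

The sketch below is a simplified model under those assumptions. It ignores the provisioner's `onDelete` parameter and treats only the listed strings as false.

```python
FALSE_VALUES = {"false", "0", "f"}  # simplified; compared case-insensitively


def on_pvc_delete(reclaim_policy: str = "Delete", archive_on_delete=None) -> dict:
    """What happens to the PV object and its NFS directory when the PVC is deleted."""
    if reclaim_policy == "Retain":
        # The PV becomes Released and the data stays for manual cleanup.
        return {"pv": "released", "directory": "kept"}
    # Delete policy: the PV object is removed and the provisioner cleans up.
    if archive_on_delete is not None and archive_on_delete.lower() in FALSE_VALUES:
        return {"pv": "deleted", "directory": "deleted"}
    return {"pv": "deleted", "directory": "archived"}


print(on_pvc_delete("Delete", "false"))  # {'pv': 'deleted', 'directory': 'deleted'}
```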

Setting up two NFS provisioners!

Step 1

$ vim nfs-prov-sa.yaml

kind: ServiceAccount
apiVersion: v1
metadata:
  name: nfs-pod-provisioner-sa
---
kind: ClusterRole # Role of kubernetes
apiVersion: rbac.authorization.k8s.io/v1 # auth API
metadata:
  name: nfs-provisioner-clusterRole
rules:
  - apiGroups: [""] # rules on persistentvolumes
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-provisioner-rolebinding
subjects:
  - kind: ServiceAccount
    name: nfs-pod-provisioner-sa
    namespace: default
roleRef: # binding cluster role to service account
  kind: ClusterRole
  name: nfs-provisioner-clusterRole # name defined in clusterRole
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-pod-provisioner-otherRoles
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-pod-provisioner-otherRoles
subjects:
  - kind: ServiceAccount
    name: nfs-pod-provisioner-sa # same as top of the file
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: nfs-pod-provisioner-otherRoles
  apiGroup: rbac.authorization.k8s.io

*********************************************************************************

Step 2

$ vim storageclass-nfs.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storageclass # IMPORTANT pvc needs to mention this name
provisioner: nfs-test # any name, but it must match PROVISIONER_NAME in the provisioner Deployment
parameters:
  archiveOnDelete: "false"

*********************************************************************************

Step 3

$ vim pod-provision-nfs.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-pod-provisioner
spec:
  selector:
    matchLabels:
      app: nfs-pod-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-pod-provisioner
    spec:
      serviceAccountName: nfs-pod-provisioner-sa # name of service account
      containers:
        - name: nfs-pod-provisioner
          # NOTE: this legacy image is no longer maintained and is known to fail on
          # Kubernetes 1.20+ ("selfLink was empty"); its successor, used earlier, is
          # registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-provisioner-v
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME # do not change
              value: nfs-test # SAME AS PROVISIONER NAME VALUE IN STORAGECLASS
            - name: NFS_SERVER # do not change
              value: 192.168.29.14 # Ip of the NFS SERVER
            - name: NFS_PATH # do not change
              value: /mnt/cym_nfs_share # path to nfs directory setup
      volumes:
        - name: nfs-provisioner-v # same as volumeMounts name
          nfs:
            server: 192.168.29.14
            path: /mnt/cym_nfs_share

*********************************************************************************

Step 4

$ vim test-claim.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc-test
spec:
  storageClassName: nfs-storageclass # SAME NAME AS THE STORAGECLASS
  accessModes:
    - ReadWriteMany # must be the same as PersistentVolume
  resources:
    requests:
      storage: 1Gi
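With two provisioners running, a PVC reaches one of the two NFS exports through a chain of names: `storageClassName`, then the class's `provisioner`, then that provisioner Deployment's `NFS_SERVER`/`NFS_PATH`.

The hypothetical lookup below uses the values from this post to show the routing. `backing_export` is an illustration only, not a Kubernetes API.

```python
# StorageClass name -> provisioner (class.yaml and storageclass-nfs.yaml).
storage_classes = {
    "nfs-client": "k8s-sigs.io/nfs-subdir-external-provisioner",
    "nfs-storageclass": "nfs-test",
}

# PROVISIONER_NAME -> (NFS_SERVER, NFS_PATH) from the two provisioner Deployments.
provisioners = {
    "k8s-sigs.io/nfs-subdir-external-provisioner": ("192.168.29.14", "/mnt/nfs_share"),
    "nfs-test": ("192.168.29.14", "/mnt/cym_nfs_share"),
}


def backing_export(storage_class_name: str) -> str:
    """Return the NFS export (server:path) that backs PVCs of the given class."""
    server, path = provisioners[storage_classes[storage_class_name]]
    return f"{server}:{path}"


print(backing_export("nfs-storageclass"))  # 192.168.29.14:/mnt/cym_nfs_share (nfs-pvc-test)
print(backing_export("nfs-client"))        # 192.168.29.14:/mnt/nfs_share (mypvc-dynamic)
```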

*********************************************************************************

Step 5

$ vi yourweb-rs-dynamic.yaml

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: myweb-your
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: myweb2
          image: httpd
          volumeMounts:
            - name: myvol
              mountPath: /usr/local/apache2/htdocs
      volumes:
        - name: myvol
          persistentVolumeClaim:
            claimName: nfs-pvc-test
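myweb-your uses the same `app: web` selector and pod label as myweb-rs. The Kubernetes documentation advises against controllers with overlapping selectors. Owner references keep each ReplicaSet from adopting pods the other one created, but anything else that selects `app: web` (a Service, for example) would see both sets of pods.

The sketch below models only `matchLabels` (matchExpressions are ignored). It shows that both selectors match the same pod labels.

```python
def selects(match_labels: dict, pod_labels: dict) -> bool:
    """matchLabels semantics: every key/value in the selector must be on the pod."""
    return all(pod_labels.get(key) == value for key, value in match_labels.items())


myweb_rs_selector = {"app": "web"}    # myweb-rs-dynamic.yaml
myweb_your_selector = {"app": "web"}  # yourweb-rs-dynamic.yaml
pod_labels = {"app": "web"}           # label both pod templates set

print(selects(myweb_rs_selector, pod_labels), selects(myweb_your_selector, pod_labels))
# True True
```

Giving the second ReplicaSet a distinct label (for example a hypothetical `app: web2` in both its selector and its template) would keep the two groups of pods separable.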

*********************

$ vi ng.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.14.2
          ports:
            - containerPort: 80
          volumeMounts:
            - name: nfsvol1
              mountPath: /nfs
              readOnly: false
      volumes:
        - name: nfsvol1
          nfs:
            server: 192.168.29.14
            path: /mnt/cym_nfs_share
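ng.yaml declares the NFS share inline in the pod template, so no PV, PVC or StorageClass is involved. The kubelet mounts `192.168.29.14:/mnt/cym_nfs_share` directly.

As a sketch, the same Deployment can be generated as a Python dict and written out as JSON, which `kubectl apply -f` also accepts. The function name `nfs_deployment` and the file name `ng.json` are hypothetical.

```python
import json


def nfs_deployment(name: str, server: str, path: str, replicas: int = 3) -> dict:
    """Build the ng.yaml Deployment with an inline NFS volume (no PVC)."""
    labels = {"app": "nginx"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "nginx",
                        "image": "nginx:1.14.2",
                        "ports": [{"containerPort": 80}],
                        "volumeMounts": [{"name": "nfsvol1", "mountPath": "/nfs", "readOnly": False}],
                    }],
                    # Inline NFS volume: server and export path are given directly.
                    "volumes": [{"name": "nfsvol1", "nfs": {"server": server, "path": path}}],
                },
            },
        },
    }


manifest = nfs_deployment("nginx-deployment", "192.168.29.14", "/mnt/cym_nfs_share")
print(json.dumps(manifest, indent=2))  # save as ng.json, then: kubectl apply -f ng.json
```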

Note! The default StorageClass

vi ~/nfs-subdir-external-provisioner/deploy/class.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "false"

kubectl apply -f class.yaml
kubectl get sc

NAME ...
nfs-client (default) ...

### A class with "(default)" after its name is the default StorageClass.

vi mypvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mypvc-dynamic
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1G

### No storageClassName is set, so this PVC uses the default class (nfs-client).

kubectl create -f mypvc.yaml
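The last PVC omits `storageClassName` and still gets provisioned, because the cluster fills in the class annotated `storageclass.kubernetes.io/is-default-class: "true"`.

The simplified sketch below assumes at most one default class. Behaviour with several defaults, or when a default is added after the PVC exists, depends on the Kubernetes version. An explicit `storageClassName: ""` opts out of the default.

```python
DEFAULT_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def effective_class(pvc_spec: dict, classes: list):
    """Class a new PVC ends up with (simplified; assumes at most one default)."""
    if "storageClassName" in pvc_spec:
        return pvc_spec["storageClassName"]  # explicit value wins; "" means no class
    for sc in classes:
        annotations = sc.get("metadata", {}).get("annotations", {})
        if annotations.get(DEFAULT_ANNOTATION) == "true":
            return sc["metadata"]["name"]
    return None  # no default class found: the PVC is left without a class


classes = [
    {"metadata": {"name": "nfs-client", "annotations": {DEFAULT_ANNOTATION: "true"}}},
    {"metadata": {"name": "nfs-storageclass"}},
]
print(effective_class({"accessModes": ["ReadWriteMany"]}, classes))  # nfs-client
```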
